By Joseph D’Aleo, CCM, Weatherbell Analytics

The media and warmists in the enviro and academic community have been troubled by the lack of warming the last decade. And Dr. Spencer shows it is in free fall again in October and early November.

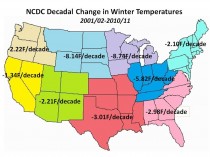

The winters have been especially concerning (PDF) with US winters cooling 4.13F the last decade with cooling in every climate region.

They have latched onto extreme events as proof of their climate change thesis. Again these journalists (the term is a stretch nowadays) and global warming scientists have no sense of history.

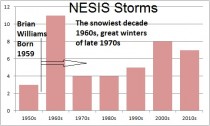

NBC’s Brian Williams in a special report after the Snowtober storm in the northeast said he did not remember weather like this when he grew up. Brian Williams grew up in Elmira, NY, born in 1959 so was a child during the snowy 1960s. He missed the hurricanes of the 1950s although his family lived in New Jersey then. He was a child when the tornado outbreaks of the 1960s and 1970s occurred and was likely in college (where his 18 college credits from Catholic University in DC likely did not include science) while he interned for Jimmy Carter’s administration when the great winters from 1976-1979 occurred.

NOAA has compiled a list of the most impactful eastern snowstorms since 1956 (NESIS). The 1960s is still the most prolific decade even after the flurry of major snows in recent years (something the IPCC, the Union of Concerned Scientists and other advocacy groups said would become rare, not increase).

His $10 million a year salary doesn’t help his memory much I guess or he is one of many people just oblivious to weather.

The 1930s had the greatest, most widespread heat and drought; the 1930s to 1950s the most hurricanes; the 1950s to 1970s the most significant tornado outbreaks. There have always been floods and droughts - no trend has been found in either. A new study conducted by federal scientists found no evidence that climate change has caused more severe flooding in the United States during the last century. The U.S. Geological Survey (USGS) study - titled “Has the magnitude of floods across the USA changed with global CO2 levels” - found no clear relationship between the increase in greenhouse gas emissions blamed for climate change and the severity of flooding in three of four regions of the United States.

A draft UN report three years in the making concludes that man-made climate change has boosted the frequency or intensity of heat waves, wildfires, floods and cyclones and that such disasters are likely to increase in the future.

Never mind that all the previous model based forecasts are failing. Temperatures instead of accelerating have flatlined, sea level is falling not rising, snow is increasing not diminishing, the winters are becoming colder not warmer, the global hurricane ACE index is near a 30 year low and on and on.

But let’s come back to what we expect to happen. We believe the earth’s climate, though locally influenced by man, is controlled on the larger scale by changes in solar activity, by decadal oscillations in the oceans and by volcanism (even moderate high latitude eruptions).

Now in their effort to explain away why warming and sea level rises stopped even as CO2 continued to rise, they are discovering cycles.

“What’s really been exciting to me about this last 10-year period is that it has made people think about decadal variability much more carefully than they probably have before,” said Susan Solomon, an atmospheric chemist and former lead author of the United Nations’ climate change report, during a recent visit to MIT. Though they use these cycles to explain the lack of warming or the cooling the last decade, don’t expect them to give the cycles any credit for the warming from 1977 to 1998.

What is ahead...well more extremes but not because of CO2 but because extremes occur when the Pacific is cold and Atlantic warm and when the sun is less active, all currently in progress.

Let me reprise what I wrote in early June about the La Nina relationship to extreme weather.

Would You Believe La Ninas Often Hurt the Economy More Than El Ninos?

There have been great extremes of weather in the last few years. Although many environmentalists and their supporting cast in the media want to blame greenhouse gas related climate change, they are only partially right. Climate is changing…always has and always will. The only constant in nature is change.

The kind of extremes we have experienced are related to a climate change to a cooling planet after a warming period, both associated with 60 year cycles in weather related to multidecadal cycles on the sun and in the oceans.

The last time we underwent such a cooling from the 1950s to 1970s, we had a similar combination barrage of extreme weather with drought, snowstorms, bitter winters, spring tornadoes and floods and hurricanes.

Some of you may remember, in the 1980s and 1990s, before the media’s favorite weather topic was climate change or global warming, it was all El Nino. It was blamed for virtually any weather event that occurred in El Nino years. It is true that El Ninos when strong are capable of producing losses that can total in the billions of dollars. El Ninos are feared in places like Indonesia, Australia, India, Brazil, Mexico, and parts of Africa where devastating droughts are possible. In the United States, southern states from California to Florida are vulnerable to damage from a barrage of strong winter storms.

Here in the United States, the El Nino of 1997/98 played a role in 18 President declared disasters with a total damage exceeding $4 billion.

However, the patterns of weather associated with strong El Nino events also produce many very positive effects and benefits.

For example, the milder temperatures of most strong El Nino winters in the interior northern United States reduce heating costs for both homes and industry and the operating costs for transportation both by air and on land. Less snowfall in the north lowers the costs of snow removal for government and industry, and enables the construction industry to work more during the winter months. Shoppers are able to get to and from stores more easily and often and retail sales benefit. El Nino also typically results in less flooding during the spring and fewer hurricanes in the summer.

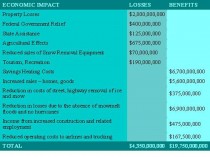

Stan Changnon, former head of the Illinois State Water Survey in the Bulletin of the American Meteorological Society in September, 1999 estimated the economic gains and losses during the great El Nino of 1997/98. He showed that the benefits were much greater than the losses.

LA NINAS ON THE OTHER HAND…

In La Nina, the picture is very different from that of El Nino. When periodic outbreaks of extreme cold weather and snow occur across the northern states, the costs of heating, snow removal, fuel for airline and trucking industries can become at least regionally significant. As snowstorms hit northern areas and snow and ice storms occur across the south or east, business may be shut down for days with major effects on commerce. Retail sales may be down due to travel difficulties. Construction work will be hampered with delays and loss of employment.

Also in La Ninas, losses from springtime flooding and from droughts and hurricanes typically are much greater than normal. Flooding in La Nina years averages nearly $4.5 billion compared to an average of $2.4 billion. We have already seen examples of that in 2008 and 2009 in Missouri and Arkansas and this year in the Ohio, Mississippi (worst since 1927), Champlain and now Missouri River Valleys.

Major tornado outbreaks occurred in January 1999 in Arkansas and Tennessee and in May in Oklahoma and Kansas with $2.3 billion in damages. And of course the super tornado outbreak of April 1974 with its 148 tornadoes that left 315 dead and 500 injured occurred during a very strong La Nina. And in the La Nina year of 1967, there was the Palm Sunday outbreak. In 2008, we set a record for tornadoes in May, this year in April. The outbreaks this year focused more on the major urban centers with greater resulting damage and death toll.

A major heat wave and drought in the strong La Nina of 1988 caused an estimated $40 billion in damage or losses (mostly agricultural) in the central and eastern United States. 2010’s summer La Nina brought record heat like 1988 but not the drought because the winter had been so snowy and wet and the soils saturated. This winter and spring drought in the southern plains is very costly. In Texas it was the third worst May drought behind only 1918 and 1956.

Hurricane-related losses in La Nina years average $5.9 billion compared to an all-year average of $3 billion. In fact, almost all the major east coast hurricanes have been in La Nina years, especially those with a warm Atlantic as we have currently.
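To put the flooding and hurricane figures above on a common footing, the short Python sketch below divides each La Nina-year average by its comparison average. The dictionary layout and the function name `la_nina_ratio` are illustrative choices, and the sketch assumes the $2.4 billion flooding figure is an all-year average like the hurricane one.

```python
# Average annual US losses quoted in the text, in billions of dollars.
# Assumption: the flooding comparison figure is an all-year average.
losses = {
    "flooding": {"la_nina": 4.5, "all_years": 2.4},
    "hurricanes": {"la_nina": 5.9, "all_years": 3.0},
}


def la_nina_ratio(category):
    """Return the La Nina-year average loss divided by the comparison average."""
    entry = losses[category]
    return entry["la_nina"] / entry["all_years"]


for name in losses:
    print(f"{name}: {la_nina_ratio(name):.2f}x the comparison average")
```

On these numbers both categories come out a little under twice the comparison average.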

SOME BENEFITS TOO

On the other hand, the winter sports industry may benefit in the west and north from increased snowfall. The last three La Nina years have been boon years. Though the northern tier pays more for energy costs with cold winters and the occasional hot summer, many others save on their energy bills. This past winter was unusually cold in Florida in December into January but spring warmth followed early.

Warmer than normal temperatures in the big cities of the east and south in most La Ninas may save consumers there billions through reduced heating costs. Tourism in ‘escape’ destinations like Florida and California is usually up. Sales of snow removal equipment and winter clothing are also higher in the north and west.

But unlike El Ninos, the benefits are often dwarfed by the losses.

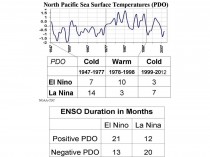

TIES TO THE PACIFIC DECADAL OSCILLATION

The frequency of both El Ninos and La Ninas is tied to the PDO. When the PDO is warm (positive) more El Ninos are favored and when negative La Ninas. The PDO phases average about 25-30 years in length. Though the PDO is a measure of conditions from 20N to the North Pacific, it turns out that warm water is favored in the tropics during the warm phase and cool water in the cool phase. This is what predisposes the Pacific towards an El Nino or La Nina state.

It appears the PDO, which flirted with a change to negative in the late 1990s, has returned solidly to a negative cold regime state. This is consistent with the 25-30 year phase length as the last shift, called the Great Pacific Climate Shift, occurred around 1977. If indeed it stays there this time, we can expect more La Ninas like this one in the years ahead just as we found in the last cold phase from 1947 to 1977. If that is the case and we see more La Ninas like this year, look for:

(1) With more La Ninas than El Ninos, declining global temperatures especially as the Atlantic cools and during quiet solar periods or when volcanism increases

(2) More cold and snow across the northern tier from the Pacific Northwest and Northern plains to the Great Lakes and New York and New England. Ice storms are also more frequent on the southern end of the snow bands. They can be just as devastating as the early heavy snowstorm before the deciduous trees shed their leaves the last week. Recall 1973, 1974, 1997, 2008 for some recent examples in the northeast.

(3) Western snowpack increases. Scientists have measured new ice in Montana’s Glacier National Park and atop Colorado’s Front Range mountains. In northwest Wyoming, there is photographic evidence of snowfield growth after Bob Comey, director of the Bridger-Teton National Forest Avalanche Center, compared photos of peaks from year to year. His images taken before snow started falling again this autumn show what appears to be significantly more ice in the Teton Range compared with two years ago. Last spring, record snow depths and avalanches around Jackson Hole gave way to concern about possible flooding, but fairly cool weather kept much of the snow right where it was. The flooding that did occur, at least in Wyoming, was less severe than feared. “I’ve never seen a season with a gain like we’ve seen this summer,” Comey said.

(4) More winters with below normal snow Mid-Atlantic south. The occasional one year El Ninos will bring heavy snows to the south and east and be colder than the El Ninos in the warm PDO

(5) More late winter and spring floods from spring storms and snowmelt

(6) Dry winters and early springs in Arizona, Texas and Florida with spring brush fires and dust storms in the desert

(7) More tornado outbreaks and stronger tornadoes in the spring months with focus from the western Gulf to the Mississippi and Ohio Valleys instead of Tornado Alley.

(8) More Atlantic hurricanes threatening the east coast from Florida north, especially as long as the Atlantic stays warm (Atlantic usually lags up to a decade or so after the Pacific in its multidecadal cycles).

(9) Greater chances of winter and following growing season drought in the major growing areas especially when La Ninas come on after an El Nino winter

SUMMARY

Some extremes of weather have been increasing even as the earth cools. But the causes are natural. La Ninas produce more winter cold and snows, more severe weather from fall through spring, more springtime flooding and summer droughts and heat waves, enhanced landfall hurricane threats with greater economic impact and declining global temperatures. Given the flip of the Pacific Decadal Oscillation to the cold mode which favors more La Ninas like this one, we may be looking back at recent decades when El Ninos dominated as the good old days when weather was unusually benign and favorable.

Please join us at weatherbell.com for daily posts by Joe Bastardi and me on forecast weather and climate. We forecast the devastating wet snowstorm’s effects many days in advance. JB appeared on FOX Business last Thursday to alert the viewers to devastating power outages. We had forecast the drought in the south, the floods in the north and Ohio/Mississippi Valleys, the tornado outbreaks, a landfalling hurricane on the east coast (even forecast NYC as target). Join us and see what we have in mind for global weather this upcoming winter (summer southern hemisphere).

By Roger Pielke Sr.

A news article appeared today titled

‘Snowtober’ fits U.N. climate change predictions

The article starts with the text

While the Northeast is still reeling from a surprise October snowstorm that has left more than a million people without power for days, the United Nations is about to release its latest document on adaptation to climate change.

The report from the Intergovernmental Panel on Climate Change is expected to conclude that there is a high probability that man-made greenhouse gases already are causing extreme weather that has cost governments, insurers, businesses and individuals billions of dollars. And it is certain to predict that costs due to extreme weather will rise and some areas of the world will become more perilous places to live.

The historic snowstorm in the northeast United States a few days ago was due to an unusually far south Polar jet stream for this time of the year, and colder than average temperatures associated with the east coast storm. To claim that this event fits with the IPCC climate change predictions is ridiculous. The article reads as an op-ed in the guise of a news article.

Steve Goddard responds:

Here are the actual IPCC forecasts

2001 15.2.4.1.2.4. Ice Storms: “Milder winter temperatures will decrease heavy snowstorms” IPCC Draft 1995

And from the east coast - New York Times shrinking snow cover in winter.

----------------

The CO2 greenhouse warming theory is flawed. Not only has warming stopped in the atmosphere and ocean but the oceans can readily absorb anything we throw into the air. CO2 is a trace gas already. The oceans have an infinite capacity to remove it from the air when cooling accelerates.

Also note how in this experiment, Geologist Tom Segalstad shoots down the long lifetime for atmospheric CO2 assumed by the IPCC and their tinkertoy climate models.

An eloquent experiment by geologist Tom Segalstad, dramatic proof of the rapid absorption of CO2 by water. The calcium carbonate touch is inspired!

Segalstad explains:

This video shows that a candle floating on water, burning in the air inside a glass, converts the oxygen in the air to CO2. The water rises in the glass because the CO2, which replaced the oxygen, is quickly dissolved in the water. The water contains calcium ions Ca++, because we initially dissolved calcium hydroxide Ca(OH)2 in the water. The CO2 produced during oxygen burning reacts with the calcium ions to produce solid calcium carbonate CaCO3, which is easily visible as a whitening of the water when we switch on a flashlight. This little kitchen experiment demonstrates the inorganic carbon cycle in nature.

The oceans take out our anthropogenic CO2 gas by quickly dissolving it as bicarbonate HCO3-, which in turn forms solid calcium carbonate either organically in calcareous organisms or precipitates inorganically. The CaCO3 is precipitating and not dissolving during this process, because buffering in the ocean maintains a stable pH around 8. We also see that CO2 reacts very fast with the water, contrary to the claim by the IPCC that it takes 50 - 200 years for this to happen. Try this for yourself in your kitchen! (OR CLASSROOM).

Greenpeace Exposed!

October 31, 2011 11:36 A.M. By Greg Pollowitz

The Consumers Alliance for Global Prosperity has a new video out exposing Greenpeace and how Greenpeace’s environmentalism actually hurts the developing world. I don’t know who they got to do the voice in the vid, but he’s pretty scary. Perfect for Halloween today. Enjoy:

----------------------------

You Cannot have it Both Ways

By Harold Ambler

I spent 25 years of my life worried about global warming. And one of the best proofs that the scientists and the media both had to keep me convinced was warmer winters, with less snow. Al Gore talked about it. Robert F. Kennedy Jr. wrote about it. Dozens of scientists published papers showing that winters were getting warmer, with less snow.

Well, in the midst of this kind of certainty about warmer winters, with less snow, some scientists, among them a Russian named Habibullo Abdussamatov, dared to question the idea that warmer winters, with less snow, were caused by carbon dioxide in the first place and also questioned whether Earth would continue to warm during the next few decades.

Abdussamatov and the rest of the skeptical scientists were widely ridiculed, even condemned. Corrupt, sub-human, blind, people called them. And worse.

They were none of these things.

Then, a funny thing started happening a couple of autumns ago. First, significant early-season snow events began to materialize during fall. Second, winters in the Northern Hemisphere started to show characteristics of the winters that Al Gore and RFK Jr. said they missed so much.

But now, in our era of terror of weather, the return to colder winters, with more snow, has been twisted by the same people who missed the colder winters, with more snow, of their youth. These new cold winters, with more snow, were not evidence of natural climate cycles, they said. The snow was, in fact, caused by global warming. This is what they said. And I have to believe that it is what they meant.

But it cannot continue. The science claiming that the unfolding transition to colder winters, with more snow, is proof of global warming is bad science. And turning an entire generation of people into nature-fearers is a grave sin.

The ocean-atmosphere system did not use to sit in benevolent stasis. Sea level did not use to remain ever stable. Droughts are not a product of modernity, and they are not increasing in number. And early-season snowstorms like the one unfolding in the Northeast were never proof of global warming. Not when they happened in the past, and not now.

To Mr. Gore, and his still passionate supporters, I say this: You cannot have it both ways. You cannot count the absence of snow as proof of your theories and the presence of snow as the proof of your theories.

We’re smarter than that. And you’re going to have to do better, if you wish to win this debate.

See post here.

Halloween is this weekend or Monday. Already before Halloween, two snowstorms have hit the east bringing record October snows. New York City’s snow is greatest for October since records began in 1869.

The excuse they used the last few years was that the snow and cold was the result of strong blocking high pressure in the arctic pushing cold air south. Well that is NOT currently happening. So how does one explain the cold and snow? Or the fact that winters have cooled 4.13F the last decade for the lower 48 states? Every region has cooled.

PDF of all regions.

By Roger Pielke Jr., Roger Pielke Jr.’s Blog

Here is another good example why I have come to view parts of the climate science research enterprise with a considerable degree of distrust.

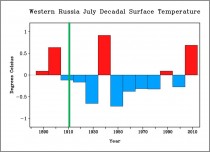

A paper was released yesterday by PNAS, by Stefan Rahmstorf and Dim Coumou, which asserts that the 2010 Russian summer heat wave was, with 80% probability, the result of a background warming trend. But if you take a look at the actual paper you see that they made some arbitrary choices (which are at least unexplained from a scientific standpoint) that bias the results in a particular direction.

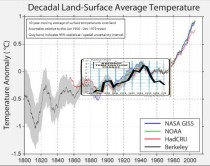

Look at the annotated figure above (enlarged), which originally comes from an EGU poster by Dole et al. (programme here in PDF). It shows surface temperature anomalies in Russia dating back to 1880. I added in the green line which shows the date from which Rahmstorf and Coumou decided to begin their analysis: 1911, immediately after an extended warm period and at the start of an extended cool period.

Obviously, any examination of statistics will depend upon the data that is included and not included. Why did Rahmstorf and Coumou start with 1911? A century, 100 years, is a nice round number, but it does not have any privileged scientific meaning. Why did they not report the sensitivity of their results to choice of start date? There may indeed be very good scientific reasons why starting the analysis in 1911 makes the most sense and for the paper to not report the sensitivity of results to the start date. But the authors did not share that information with their readers. Hence, the decision looks arbitrary and to have influenced the results.
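A start-date sensitivity check of the kind asked for here is simple to sketch. The Python below uses a made-up series (a slow drift plus an oscillation, not the Russian data or the method of the PNAS paper) and hypothetical helper names (`ols_slope`, `trend_from`) to show how a fitted linear trend can shift as the first year of the analysis moves.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def trend_from(years, values, start_year):
    """Linear trend per decade using only the data from start_year onward."""
    pairs = [(y, v) for y, v in zip(years, values) if y >= start_year]
    xs, ys = zip(*pairs)
    return 10.0 * ols_slope(xs, ys)


# Synthetic anomalies invented for illustration: a small linear drift plus
# a slow oscillation. These are NOT the temperature data discussed above.
years = list(range(1880, 2010))
values = [
    0.005 * (y - 1880) + 0.3 * math.sin(2 * math.pi * (y - 1880) / 60.0)
    for y in years
]

# Report the fitted trend for several candidate start years.
for start in (1880, 1900, 1911, 1930, 1950):
    print(start, round(trend_from(years, values, start), 3))
```

If the printed trends differ materially across start years, the choice of start year matters and arguably belongs in the paper; if they barely move, the choice is harmless.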

Climate science—or at least some parts of it—seems to have devolved into an effort to generate media coverage and talking points for blogs, at the expense of actually adding to our scientific knowledge of the climate system. The new PNAS paper sure looks like a cherry pick to me. For a scientific exploration of the Russian heat wave that seems far more trustworthy to me, take a look at this paper.

Posted on October 25, 2011 by Steven Goddard

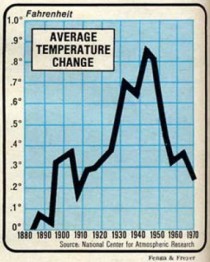

In 1975, NCAR generated this graph of global cooling. Temperatures plummeted from 1945 to at least 1970.

In 2011, Richard Muller published this graph, showing that it never happened.

Below is an overlay at the same scale. The cooling after 1950 has disappeared. Winston Smith would be proud!

Dr. Don J. Easterbrook, Western Washington University, Bellingham, WA

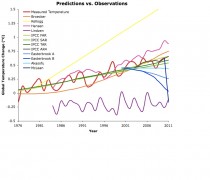

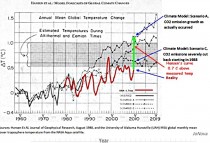

The October 18, 2011 post on Skeptical Science entitled “How Global Temperatures Predictions Compare to What Happened (Skeptics Off Target)” by ‘Dana1981’ claimed that “the IPCC projections have thus far been the most accurate” and “mainstream climate science predictions… have mostly done well.... and the “skeptics” have generally done rather poorly.” It added that “several skeptics basically failed, while leading scientists such as Dr. James Hansen (a regular climate activist, as well as the top climatologist at NASA) and those at the IPCC did pretty darn well.” Figure 1 shows a graphical comparison of predictions vs. observations as portrayed by Dana1981.

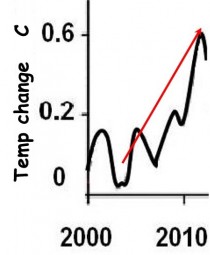

However, the graph and these statements seemed to fly in the face of data, which show just the opposite - that computer models have failed badly in predicting temperatures over the past decade. So how could anyone make these claims? Figure 2 shows the IPCC temperature predictions from 2000 to 2011, taken from the IPCC website in 2000. Note that their projection is for warming of 0.6C (1.1F) between 2003 and now.

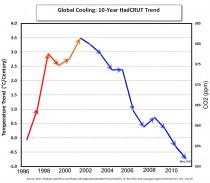

How close is the IPCC projected warming to the measured temperatures? Figure 3 shows a 10-year cooling trend, so how can the computer-model projected warming of 0.6C (1.1F) be considered accurate?
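For reference, the 0.6C and 1.1F figures are the same temperature change: a difference in Celsius converts to Fahrenheit with the 9/5 factor alone, without the 32-degree offset used for absolute temperatures. A minimal Python check (the function name `delta_c_to_f` is illustrative):

```python
def delta_c_to_f(delta_c):
    """Convert a temperature difference from Celsius to Fahrenheit degrees."""
    # Differences scale by 9/5; the +32 offset applies only to absolute readings.
    return delta_c * 9.0 / 5.0


print(round(delta_c_to_f(0.6), 2))  # 1.08, i.e. about 1.1F
```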

What’s wrong with Figure 1?

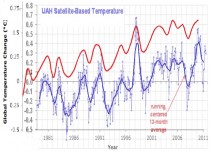

The comparison of various predicted temperature curves and what is labeled “Measured temperature” in Figure 1 is definitely at odds with Figures 2 and 3. How can the IPCC computer model predictions of a full degree of warming (F) (0.6 C) in the past decade and temperature predictions of Hansen and Broecker be consistent with no global warming in the past decade (in fact, slight cooling)? Something is badly off base here. One possibility is that the ‘Measured temperature’ curve in Figure 1 is too high, taking it into the temperatures of model predictions, so I took the UAH satellite temperature curve from 1979 to Sept. 2011 (available at http://www.drroyspencer.com/latest-global-temperatures/) and dropped it onto Figure 1. The result (Figure 4) was rather startling. What is immediately apparent is that the temperatures plotted in Figure 1 are nearly half a degree C higher than the UAH temperatures! No source is given for the red temperature curve in Figure 1, but it seems to generally mimic the UAH temperatures, rather than ground-measured temperatures.

Professor Muller of Berkeley, with funding from many different groups, undertook a reevaluation of global temperature trends. The results, predictably, since he appears to have worked with much of the same raw data all three global data centers used or started with, showed little had changed. Even before the peer review process on four papers in progress was complete, the project jumped the gun on releasing the results. The media equally predictably proclaimed it the death knell for skepticism. There were a number of responses you should read before you prematurely jump to the same conclusion.

Roger Pielke Sr. on Climate Science:

On Climate Etc, Judy Curry posted

Berkeley Surface Temperatures: Released

which refers to the Economist article “new analysis of the temperature record leaves little room for the doubters. The world is warming”

The Economist article includes the text

There are three compilations of mean global temperatures, each one based on readings from thousands of thermometers, kept in weather stations and aboard ships, going back over 150 years. Two are American, provided by NASA and the National Oceanic and Atmospheric Administration (NOAA), one is a collaboration between Britain’s Met Office and the University of East Anglia’s Climate Research Unit (known as Hadley CRU). And all suggest a similar pattern of warming: amounting to about 0.9C over land in the past half century.

The raw surface temperature data from which all of the different global surface temperature trend analyses are derived are essentially the same...it is no surprise that his trends are the same for GISS, CRU and NCDC but we do not know if it is true or not for Muller et al. His claim is that they draw from a much larger set of data. Here is what I wrote on several weblogs.

What I would like to see is a map with the GHCN sites and with the added sites from Muller et al. The years of record for his added sites should be given. Also, were the GHCN sites excluded when they did their trend assessment? If not, and the results are weighted by the years of record, this would bias the results towards the GHCN trends.

The degree of independence of the Muller et al sites from the GHCN needs to be quantified.

I discussed this most recently in my post “Erroneous Information In The Report ‘Procedural Review of EPA’s Greenhouse Gases Endangerment Finding Data Quality Processes’”.

The new Muller et al study, therefore, has a very major unanswered question. I have asked it on Judy’s weblog since she is a co-author of these studies [and Muller never replied to my request to answer this question].

Hi Judy – I encourage you to document how much overlap there is in Muller’s analysis with the locations used by GISS, NCDC and CRU. In our paper

Pielke Sr., R.A., C. Davey, D. Niyogi, S. Fall, J. Steinweg-Woods, K. Hubbard, X. Lin, M. Cai, Y.-K. Lim, H. Li, J. Nielsen-Gammon, K. Gallo, R. Hale, R. Mahmood, S. Foster, R.T. McNider, and P. Blanken, 2007: Unresolved issues with the assessment of multi-decadal global land surface temperature trends. J. Geophys. Res., 112, D24S08, doi:10.1029/2006JD008229.

we reported that

“The raw surface temperature data from which all of the different global surface temperature trend analyses are derived are essentially the same. The best estimate that has been reported is that 90–95% of the raw data in each of the analyses is the same (P. Jones, personal communication, 2003).” Unless Muller pulls from a significantly different set of raw data, it is no surprise that his trends are the same.
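The overlap question raised above comes down to a set comparison once station lists are in hand. Below is a minimal Python sketch, assuming each analysis can be reduced to a list of station identifiers; the identifiers and the function name `overlap_fraction` are invented for illustration and do not come from GHCN or the Berkeley data.

```python
def overlap_fraction(candidate_ids, reference_ids):
    """Fraction of candidate stations that also appear in the reference set."""
    candidate = set(candidate_ids)
    if not candidate:
        raise ValueError("candidate station list is empty")
    return len(candidate & set(reference_ids)) / len(candidate)


# Hypothetical station identifiers, invented purely for illustration.
ghcn_like = {"ST001", "ST002", "ST003", "ST004"}
new_analysis = {"ST001", "ST002", "ST003", "ST004", "ST900"}

print(overlap_fraction(new_analysis, ghcn_like))  # 0.8
```

A fuller comparison would also weight each station by its years of record, since a shared station with a long record influences a trend more than a short-lived new one.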

Anthony Watts in post The Berkeley Earth Surface Temperature project puts PR before peer review.

Readers may recall this post last week where I complained about being put in an uncomfortable quandary by an author of a new paper. Despite that, I chose to honor the confidentiality request of the author Dr. Richard Muller, even though I knew that behind the scenes, they were planning a media blitz to MSM outlets. In the past few days I have been contacted by James Astill of the Economist, Ian Sample of the Guardian, and Leslie Kaufman of the New York Times. They have all contacted me regarding the release of papers from BEST today.

There’s only one problem: Not one of the BEST papers has completed peer review.

Nor has one been published in a journal to my knowledge, nor is the one paper I’ve been asked to comment on in press at JGR (where I was told it was submitted), yet BEST is making a “pre-peer review” media blitz.

One willing participant to this blitz, that I spent the last week corresponding with, is James Astill of The Economist, who presumably wrote the article below, but we can’t be sure since the Economist has not the integrity to put author names to articles.

In an earlier post, Anthony reported:

Thorne said scientists who contributed to the three main studies -by NOAA, NASA and Britain’s Met Office -welcome new peer-reviewed research. But he said the Berkeley team had been “seriously compromised” by publicizing its work before publishing any vetted papers. - Peter Thorne, National Climatic Data Center in Asheville, N.C.

He went on to report even IPCC Lead Author and alarmist Kevin Trenberth had his doubts:

Even Trenberth isn’t too sure about it:

Kevin Trenberth, who heads the Climate Analysis Section of the National Center for Atmospheric Research, a university consortium, said he was “highly skeptical of the hype and claims” surrounding the Berkeley effort. “The team has some good people,” he said, “but not the expertise required in certain areas, and purely statistical approaches are naive.”

Lubos Motl The Reference Frame Berkeley Earth recalculates global mean temperature, gets misinterpreted:

Berkeley Earth Surface Temperature led by Richard Muller – a top Berkeley physics teacher and the PhD adviser of the fresh physics Nobel prize winner Saul Perlmutter, among others - has recalculated the evolution of the global mean temperature in the most recent two centuries or so, qualitatively confirmed the previous graphs, and got dishonestly reported in the media.

Some people including Marc Morano of Climate Depot were predicting that this outcome was the very point of the project. They were worried about the positive treatment that Richard Muller received at various places including this blog and they were proved right. Today, it really does look like all the people in the “BEST” project were just puppets used in a bigger, pre-planned propaganda game.

Marc Morano of Climate Depot in Befuddled Warmist Richard Muller Declares Skeptics Should Convert to Believers Because His Study Shows the Earth Has Warmed Since the 1950s!

‘Warming now equals human causation?! Muller should be ashamed of himself for promoting media spin like this’—‘Muller’s study is already being met with massive scientific blowback from his colleagues’.

Figure 1. Temperature predictions vs. observations as portrayed by Dana1981.

Figure 2. IPCC global warming prediction from the IPCC website in 2000.

Figure 3. Ten-year cooling trend from 2001 to 2011.

Figure 4. Comparison of UAH satellite global temperatures (blue curve) with temperatures plotted in Figure 1 (red curve).

Figure 5 corroborates that Hansen’s 1988 temperature curve is 0.7C higher than the UAH measured temperature curve. By no stretch of the imagination can his prediction be considered ‘accurate!’

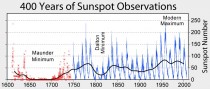

As for the skeptics’ predictions, mine were based on recurring climate cycles that can be traced back at least 400 years, long before CO2 could have been a factor. Using the concept that ‘the past is the key to the future,’ I simply continued the past, well-documented temperature patterns into the future and offered several possible scenarios beginning about 2000: (1) moderate, 25-30-year cooling similar to the 1945 to 1977 cooling; (2) more intense, 25-30-year cooling, similar to the more severe cooling from 1880 to 1915; (3) more severe, 25-30-year cooling similar to the Dalton Minimum cooling from 1790 to 1820; or (4) very severe cooling (the Little Ice Age) similar to the Maunder Minimum cooling from 1650 to 1700. So far, my cooling prediction, made in 1998, appears to be happening and is certainly far more accurate than any of the model predictions, which called for warming of a full degree F. So far cooling this past decade has been moderate, more like the 1945-1977 cooling, but as we get deeper into the present Grand Solar Minimum, the cooling trend may become more intense.

rpielke says:

October 21, 2011 at 6:22 am

Zeke Hausfather – I realize that he has a much larger set of locations, but many of them are very short term in duration (as I read from his write up). Moreover, if they are in nearly the same geographic location as the GHCN sites, they are not providing much independent new information.

See editorial and summary.

Nigel Calder in Calder’s Updates reacts this way:

Nice research, curious rhetoric. Just dis-embargoed at noon PST (8 pm BST) on 20 October are a press release and associated papers from the Berkeley Earth Surface Temperatures (BEST) project. A team led by Richard A. Muller has been asking whether the histories of land surface temperatures from the likes of NOAA, NASA and the Hadley Centre are to be trusted. Clever statistics glean and process raw data from 39,000 weather stations world wide - more than five times as many as used by other analysts.

...The BEST study, based on a random selection of weather stations, you’ll see that the average temperatures of the land correspond quite well with the other series.

What’s very odd is the rhetoric of the press release. It begins:

Global warming is real, according to a major study released today. Despite issues raised by climate change skeptics, the Berkeley Earth Surface Temperature study finds reliable evidence of a rise in the average world land temperature of approximately 1C since the mid-1950s.

Global warming real? Not recently, folks. The black curve in the graph confirms what experts have known for years, that warming stopped in the mid-1990s, when the Sun was switching from a manic to a depressive phase.

Elsewhere the press release first begs the question by calling the past 50 years “the period during which the human effect on temperatures is discernible” and then contradicts this by saying, “What Berkeley Earth has not done is make an independent assessment of how much of the observed warming is due to human actions, Richard Muller acknowledged.”

Let me say there is interesting stuff in the material released today, particularly in the paper on Decadal Variations, tracing links with El Nino and other regional temperature oscillations - a subject I may return to when I have more time.

It hasn’t escaped my attention that BEST is today gunning mainly for Anthony Watts and his Surface Stations project in the USA, but he’s well capable of answering for himself.

See also James Delingpole on the Muller releases.

By Joseph D’Aleo, Weatherbell.com

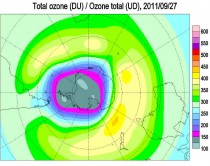

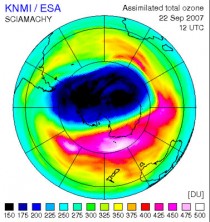

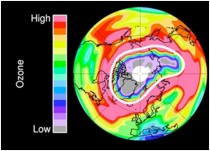

The ozone hole above the Antarctic has reached its maximum extent for the year, revealing a gouge in the protective atmospheric layer that rivals the size of North America, scientists have announced.

Spanning about 9.7 million square miles (25 million square kilometers), the ozone hole over the South Pole reached its maximum annual size on Sept. 14, 2011, coming in as the fifth largest on record. The largest Antarctic ozone hole ever recorded occurred in 2006, at a size of 10.6 million square miles (27.5 million square km), a size documented by NASA’s Earth-observing Aura satellite.

The Antarctic ozone hole was first discovered in the late 1970s by the first satellite mission that could measure ozone, a spacecraft called POES and run by the National Oceanic and Atmospheric Administration (NOAA). The hole continued to grow steadily during the 1980s and 90s, though since early 2000 the growth has reportedly leveled off. Even so, scientists have seen large variability in its size from year to year.

On the Earth’s surface, ozone is a pollutant, but in the stratosphere it forms a protective layer that reflects ultraviolet radiation back out into space, protecting us from the damaging UV rays. Years with large ozone holes are now more associated with very cold winters over Antarctica and high polar winds that prevent the mixing of ozone-rich air outside of the polar circulation with the ozone-depleted air inside, the scientists say.

There is a lot of year-to-year variability: in 2007, the ozone hole shrank 30% from the record-setting 2006 winter.

The record setting ozone hole in 2006 (animating here).

In 2007, it was said: “Although the hole is somewhat smaller than usual, we cannot conclude from this that the ozone layer is recovering already,” said Ronald van der A, a senior project scientist at the Royal Dutch Meteorological Institute in the Netherlands.

This year, the ozone region over Antarctica dropped 30.5 million tons, compared to the record-setting 2006 loss of 44.1 million tons. Van der A said natural variations in temperature and atmospheric changes are responsible for the decrease in ozone loss, and that the decrease is not indicative of a long-term healing.
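As a rough consistency check on the two mass-loss figures just quoted, the drop from 44.1 to 30.5 million tons can be expressed as a percentage. This is only a minimal sketch: it assumes the roughly 30% shrinkage cited for 2007 refers to ozone mass loss rather than hole area (the text does not say which), and the function name is purely illustrative.

```python
def percent_decrease(previous, current):
    """Return the percentage by which `current` falls below `previous`."""
    return (previous - current) / previous * 100.0

# Ozone mass-loss figures quoted in the text, in millions of tons.
loss_2006 = 44.1  # record-setting year
loss_2007 = 30.5  # the "this year" of the 2007 quote

drop = percent_decrease(loss_2006, loss_2007)
print(f"2007 ozone loss was {drop:.1f}% below the 2006 record")
```

Under that assumption the decrease works out to a little under 31%, in line with the "30%" figure given for 2007.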

“This year’s (2007) ozone hole was less centered on the South Pole as in other years, which allowed it to mix with warmer air,” van der A said. Because ozone depletes at temperatures colder than -108 degrees Fahrenheit (-78 degrees Celsius), the warm air helped protect the thin layer about 16 miles (25 kilometers) above our heads. As winter arrives, a vortex of winds develops around the pole and isolates the polar stratosphere. When temperatures drop below -78C (-109F), thin clouds form of ice, nitric acid, and sulphuric acid mixtures. Chemical reactions on the surfaces of ice crystals in the clouds release active forms of CFCs. Ozone depletion begins, and the ozone “hole” appears.

Over the course of two to three months, approximately 50% of the total column amount of ozone in the atmosphere disappears. At some levels, the losses approach 90%. This has come to be called the Antarctic ozone hole. In spring, temperatures begin to rise, the ice evaporates, and the ozone layer starts to recover.

Intense cold in the upper atmosphere of the Arctic last winter activated ozone-depleting chemicals and produced the first significant ozone hole ever recorded over the high northern regions, scientists reported in the journal Nature.

This year, for the first time scientists also found a depletion of ozone above the Arctic that resembled its South Pole counterpart. “For the first time, sufficient loss occurred to reasonably be described as an Arctic ozone hole,” the researchers wrote.

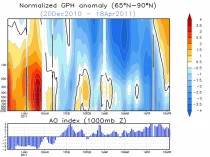

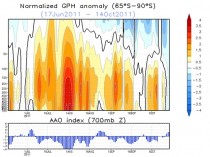

It was related to a rebound cooling of the polar stratosphere and upper troposphere. Notice the December and early January warmth and VERY NEGATIVE AO and the pop of the AO and rapid cooling starting in January.

The Antarctic after a record negative polar warming, turned colder in mid to late winter (starting in late August).

Also note the scientists mentioning the sulfuric acid mixture’s role in the ozone destruction. Sulfate aerosols are associated with volcanism, and the recent high latitude volcanoes in Alaska, Iceland and Chile may have contributed to the blocking (warming). Like a pendulum, a swing to one state can result in a rebound to the opposite extreme, which was very obvious in the Arctic.

The data shows a lot of variability and no real trends after the Montreal Protocol banned CFCs. The models had predicted a partial recovery by now. Later scientists adjusted their models and pronounced the recovery would take decades. It may be just another failed alarmist prediction.

Remember we first found the ozone hole when satellites that measure ozone were first available and processed (1985). It is very likely to have been there forever, varying year to year and decade to decade as solar cycles and volcanic events affected high latitude winter vortex strength. PDF.

by Anthony Watts

I had to laugh after reading the reviews on Amazon.com for Donna Laframboise’s book: The Delinquent Teenager Who Was Mistaken for the World’s Top Climate Expert.

There’s some double fun here, because the title reminds me of the language used in the 1 star review given by Dr. Peter Gleick of the Pacific Institute.

The first fun part: Gleick apparently never read the book before posting a negative review, because if he had, he wouldn’t be intellectually slaughtered by some commenters who challenge his claims by pointing out page and paragraph in the book showing exactly how Gleick is the one posting false claims. You can read the reviews here at Amazon, and if you’ve bought the book and have read it, add your own. If you haven’t bought it yet, here’s the link for the Kindle edition. Best $4.99 you’ll ever spend. If you don’t own a Kindle you can read this book on your iPad or Mac via Amazon’s free Kindle Cloud Reader - or on your desktop or laptop via Kindle for PC software.

The other fun part? Gleick apparently doesn’t realize he’s up against a seasoned journalist; he thinks Donna is just another “denier”. Another inconvenient truth for Gleick is that she was a member of the board of directors of the Canadian Civil Liberties Association - serving as a Vice-President from 1998-2001.

Read Gleick’s critique and rebuttal comments by those who actually read the book here.

By Matt Ridley, The Rational Optimist

Which would you rather have in the view from your house? A thing about the size of a domestic garage, or eight towers twice the height of Nelson’s column with blades noisily thrumming the air? The energy they can produce over ten years is similar: eight wind turbines of 2.5-megawatts (working at roughly 25% capacity) roughly equal the output of an average Pennsylvania shale gas well (converted to electricity at 50% efficiency) in its first ten years.
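The comparison above can be checked with back-of-envelope arithmetic. The sketch below uses only the figures given in the paragraph (eight turbines, 2.5 MW each, 25% capacity factor, ten years, 50% gas-to-electricity efficiency); the function names and the 8,760-hour year are assumptions made here for illustration, not anything taken from the article.

```python
HOURS_PER_YEAR = 8760  # assumption: non-leap year

def wind_output_gwh(n_turbines, rated_mw, capacity_factor, years):
    """Electricity generated by a wind farm over `years`, in GWh."""
    average_mw = n_turbines * rated_mw * capacity_factor
    return average_mw * HOURS_PER_YEAR * years / 1000.0

def gas_thermal_needed_gwh(electric_gwh, conversion_efficiency):
    """Thermal energy of gas needed to generate `electric_gwh` of electricity."""
    return electric_gwh / conversion_efficiency

wind_gwh = wind_output_gwh(n_turbines=8, rated_mw=2.5, capacity_factor=0.25, years=10)
gas_gwh = gas_thermal_needed_gwh(wind_gwh, conversion_efficiency=0.50)

print(f"Wind farm output over ten years: {wind_gwh:.0f} GWh")
print(f"Gas energy for the same electricity: {gas_gwh:.0f} GWh (thermal)")
```

On these numbers the eight turbines deliver about 438 GWh of electricity in a decade, so the well would need to yield roughly 876 GWh of thermal energy over the same period for the two to be equal.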

Difficult choice? Let’s make it easier. The gas well can be hidden in a hollow, behind a hedge. The eight wind turbines must be on top of hills, because that is where the wind blows, visible for up to 40 miles. And they require the construction of new pylons marching to the towns; the gas well is connected by an underground pipe.

Unpersuaded? Wind turbines slice thousands of birds of prey in half every year, including white-tailed eagles in Norway, golden eagles in California, wedge-tailed eagles in Tasmania. There’s a video on YouTube of one winging a griffon vulture in Crete. According to a study in Pennsylvania, a wind farm with eight turbines would kill about 200 bats a year. The pressure wave from the passing blade just implodes the little creatures’ lungs. You and I can go to jail for harming bats or eagles; wind companies are immune.

Still can’t make up your mind? The wind farm requires eight tonnes of an element called neodymium, which is produced only in Inner Mongolia, by boiling ores in acid leaving lakes of radioactive tailings so toxic no creature goes near them.

Not convinced? The gas well requires no subsidy - in fact it pays a hefty tax to the government - whereas the wind turbines each cost you a substantial add-on to your electricity bill, part of which goes to the rich landowner whose land they stand on. Wind power costs three times as much as gas-fired power. Make that nine times if the wind farm is offshore. And that’s assuming the cost of decommissioning the wind farm is left to your children - few will last 25 years.

Decided yet? I forgot to mention something. If you choose the gas well, that’s it, you can have it. If you choose the wind farm, you are going to need the gas well too. That’s because when the wind does not blow you will need a back-up power station running on something more reliable. But the bloke who builds gas turbines is not happy to build one that only operates when the wind drops, so he’s now demanding a subsidy, too.

What’s that you say? Gas is running out? Have you not heard the news? It’s not. Till five years ago gas was the fuel everybody thought would run out first, before oil and coal. America was getting so worried even Alan Greenspan told it to start building gas import terminals, which it did. They are now being mothballed, or turned into export terminals.

A chap called George Mitchell turned the gas industry on its head. Using just the right combination of horizontal drilling and hydraulic fracturing (fracking) - both well established technologies - he worked out how to get gas out of shale where most of it is, rather than just out of (conventional) porous rocks, where it sometimes pools. The Barnett shale in Texas, where Mitchell worked, turned into one of the biggest gas reserves in America. Then the Haynesville shale in Louisiana dwarfed it. The Marcellus shale mainly in Pennsylvania then trumped that with a barely believable 500 trillion cubic feet of gas, as big as any oil field ever found, on the doorstep of the biggest market in the world.

The impact of shale gas in America is already huge. Gas prices have decoupled from oil prices and are half what they are in Europe. Chemical companies, which use gas as a feedstock, are rushing back from the Persian Gulf to the Gulf of Mexico. Cities are converting their bus fleets to gas. Coal projects are being shelved; nuclear ones abandoned.

Rural Pennsylvania is being transformed by the royalties that shale gas pays (Lancashire take note). Drive around the hills near Pittsburgh and you see new fences, repainted barns and - in the local towns - thriving car dealerships and upmarket shops. The one thing you barely see is gas rigs. The one I visited was hidden in a hollow in the woods, invisible till I came round the last corner where a flock of wild turkeys was crossing the road. Drilling rigs are on site for about five weeks, fracking trucks a few weeks after that, and when they are gone all that is left is a “Christmas tree” wellhead and a few small storage tanks.

The International Energy Agency reckons there is a quarter of a millennium’s worth of cheap shale gas in the world. A company called Cuadrilla drilled a hole in Blackpool, hoping to find a few trillion cubic feet of gas. Last month it announced 200 trillion cubic feet, nearly half the size of the giant Marcellus field. That’s enough to keep the entire British economy going for many decades. And it’s just the first field to have been drilled.

Jesse Ausubel is a soft-spoken academic ecologist at Rockefeller University in New York, not given to hyperbole. So when I asked him about the future of gas, I was surprised by the strength of his reply. “It’s unstoppable,” he says simply. Gas, he says, will be the world’s dominant fuel for most of the next century. Coal and renewables will have to give way, while oil is used mainly for transport. Even nuclear may have to wait in the wings.

And he is not even talking mainly about shale gas. He reckons a still bigger story is waiting to be told about offshore gas from the so-called cold seeps around the continental margins. Israel has made a huge find and is planning a pipeline to Greece, to the irritation of the Turks. The Brazilians are striking rich. The Gulf of Guinea is hot. Even our own Rockall Bank looks promising. Ausubel thinks that much of this gas is not even fossil fuel, but ancient methane from the universe that was trapped deep in the earth’s rocks - like the methane that forms lakes on Titan, one of Saturn’s moons.

The best thing about cheap gas is whom it annoys. The Russians and the Iranians hate it because they thought they were going to corner the gas market in the coming decades. The greens hate it because it destroys their argument that fossil fuels are going to get more and more costly till even wind and solar power are competitive. The nuclear industry ditto. The coal industry will be a big loser (incidentally, as somebody who gets some income from coal, I declare that writing this article is against my vested interest).

Little wonder a furious attempt to blacken shale gas’s reputation is under way, driven by an unlikely alliance of big green, big coal, big nuclear and conventional gas producers. The environmental objections to shale gas are almost comically fabricated or exaggerated. Hydraulic fracturing or fracking uses 99.86% water and sand, the rest being a dilute solution of a few chemicals of the kind you find beneath your kitchen sink.

State regulators in Alaska, Colorado, Indiana, Louisiana, Michigan, Oklahoma, Pennsylvania, South Dakota, Texas and Wyoming have all asserted in writing that there have been no verified or documented cases of groundwater contamination as a result of hydraulic fracking. Those flaming taps in the film “Gasland” were literally nothing to do with shale gas drilling and the film maker knew it before he wrote the script. The claim that gas production generates more greenhouse gases than coal is based on mistaken assumptions about gas leakage rates and cherry-picked time horizons for computing greenhouse impact.

Like Japanese soldiers hiding in the jungle decades after the war was over, our political masters have apparently not heard the news. David Cameron and Chris Huhne are still insisting that the future belongs to renewables. They are still signing contracts on your behalf guaranteeing huge incomes to landowners and power companies, and guaranteeing thereby the destruction of landscapes and jobs. The government’s “green” subsidies are costing the average small business 250,000 pounds a year. That’s ten jobs per firm. Making energy cheap is - as the industrial revolution proved - the quickest way to create jobs; making it expensive is the quickest way to lose them.

Not only are renewables far more expensive, intermittent and resource-depleting (their demand for steel and concrete is gigantic) than gas; they are also hugely more damaging to the environment, because they are so land-hungry. Wind kills birds and spoils landscapes; solar paves deserts; tidal wipes out the ecosystems of migratory birds; biofuel starves the poor and devastates the rain forest; hydro interrupts fish migration. Next time you hear somebody call these “clean” energy, don’t let him get away with it.

Wind cannot even help cut carbon emissions, because it needs carbon back-up, which is wastefully inefficient when powering up or down (nuclear cannot be turned on and off so fast). Even Germany and Denmark have failed to cut their carbon emissions by installing vast quantities of wind.

Yet switching to gas would hasten decarbonisation. In a combined cycle turbine gas converts to electricity with higher efficiency than other fossil fuels. And when you burn gas, you oxidise four hydrogen atoms for every carbon atom. That’s a better ratio than oil, much better than coal and much, much better than wood. Ausubel calculates that, thanks to gas, we will accelerate a relentless shift from carbon to hydrogen as the source of our energy without touching renewables.

To persist with a policy of pursuing subsidized renewable energy in the midst of a terrible recession, at a time when vast reserves of cheap low-carbon gas have suddenly become available is so perverse it borders on the insane. Nothing but bureaucratic inertia and vested interest can explain it.

By Laura Caroe, Daily Express

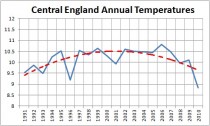

BRITAIN is set to suffer a mini ice age that could last for decades and bring with it a series of bitterly cold winters.

Central England Annual temperatures since 1991

And it could all begin within weeks as experts said last night that the mercury may soon plunge below the record -20C endured last year. Scientists say the anticipated cold blast will be due to the return of a disruptive weather pattern called La Nina. Latest evidence shows La Nina, linked to extreme winter weather in America and with a knock-on effect on Britain, is in force and will gradually strengthen as the year ends.

The climate phenomenon, characterised by unusually cold ocean temperatures in the Pacific, was linked to our icy winter last year - one of the coldest on record. And it coincides with research from the Met Office indicating the nation could be facing a repeat of the “little ice age” that gripped the country 300 years ago, causing decades of harsh winters. (See this PDF that describes the UV as an important ‘amplifying’ factor in the solar impact on climate.)

The prediction, to be published in Nature magazine, is based on observations of a slight fall in the sun’s emissions of ultraviolet radiation, which may, over a long period, trigger Arctic conditions for many years.

Although a connection between La Nina and conditions in Europe is scientifically uncertain, ministers have warned transport organisations and emergency services not to take any chances. Forecasts suggest the country could be shivering in a big freeze as severe and sustained as last winter from as early as the end of this month.

La Nina, which occurs every three to five years, has a powerful effect on weather thousands of miles away by influencing an intense upper air current that helps create low pressure fronts.

Another factor that can affect Europe is the amount of ice in the Arctic and sea temperatures closer to home.

Ian Currie, of the Meteorological Society, said: “All the world’s weather systems are connected. What is going on now in the Pacific can have repercussions later around the world.”

Parts of the country already saw the first snowfalls of the winter last week, dumping two inches on the Cairngorms in Scotland. And forecaster James Madden, from Exacta Weather, warned we are facing a “severely cold and snowy winter”.

Councils say they are fully prepared having stockpiled thousands of tons of extra grit. And the Local Government Association says it had more salt available at the beginning of this month than the total used last winter.

But the mountain of salt could be dug into very soon amid widespread heavy snow as early as the start of next month. Last winter, the Met Office was heavily criticised after predicting a mild winter, only to see the country grind to a halt amid hazardous driving conditions in temperatures as low as -20C.

Peter Box, the Local Government Association’s economy and transport spokesman, said: “Local authorities have been hard at work making preparations for this winter and keeping the roads open will be our number one priority.”

The National Grid will this week release its forecast for winter energy use based on long-range weather forecasts.

Such forecasting is, however, notoriously difficult, especially for the UK, which is subject to a wide range of competing climatic forces. A Met Office spokesman said that although La Nina was recurring, the temperatures in the equatorial Pacific were so far only 1C below normal, compared with a drop of 2C at the same time last year.

Research by America’s National Oceanic and Atmospheric Administration showed that in 2010-11 La Nina contributed to record winter snowfalls, spring flooding and drought across the world. Jonathan Powell, of Positive Weather Solutions, said: “The end of the month and November are looking colder than average with severe frosts and the chance of snow.” However, some balmy autumnal sunshine was forecast for this week.

By Paul Chesser, Daily Caller

Last week, the Center for Responsive Politics (CRP) released a report on the amount of money that has been spent in the fight over Transcanada Corp.’s proposed Keystone XL pipeline. The pipeline, which would run 1,700 miles from the Canadian tar sands to the Gulf of Mexico, is opposed by environmentalists.

Unfortunately, CRP’s report portrays the fight as a battle between “Big Oil” and poor little environmental activist groups. That couldn’t be further from the truth.

The report quotes Eddie Scher, the senior communications strategist for the Sierra Club. Scher complains that environmental groups can’t compete with the “literally unlimited resources” of energy companies.

“There’s no question we’re up against big numbers of campaign dollars,” he said. “We’re up against the cream of the crop when it comes to K Street lobbyists. But we believe even well-financed insanity is trumped by democracy.”

But the Sierra Club - like other major environmental groups - is by no means poor. At the end of 2009, it had more than $170 million in assets between its activist wing and its education foundation. The Nature Conservancy ended last year with $5.65 billion in assets, after taking in $210.5 million in revenue. The World Wildlife Fund had $377.5 million in assets as of June 2010, after scraping together $177.7 million for the fiscal year. And the National Audubon Society had $305.9 million stashed away at the end of last year. The Environmental Defense Fund, Earthjustice, the Natural Resources Defense Council, and almost every other national “green” group you’ve ever heard of are similarly “impoverished.”

And then there are the foundations - dozens if not hundreds of them - that finance environmental activism. Among their benefactors: the Energy Foundation ($68.6 million in assets), the Joyce Foundation ($773.6 million), the Rockefeller Brothers Fund ($729 million), the William and Flora Hewlett Foundation ($6.8 billion), the David and Lucile Packard Foundation ($5.7 billion), and Heinz Endowments ($1.2 billion).

Scher’s Sierra Club might not spend as much money on lobbying as energy companies do, but that’s by choice. The part of the Sierra Club that is organized under the 501(c)(4) section of the tax code - in other words, the part of the organization that isn’t limited by lobbying restrictions - had nearly $49 million in assets at its disposal at the end of 2009. According to its 2009 tax return, the group spent about $4.9 million on “lobbying and political expenditures.” Only $480,000 of that money was spent at the federal level. The other $4.4 million was spent lobbying at the state level or on political activities like advertisements.
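The split described above is simple arithmetic and can be verified directly. The sketch below uses only the dollar figures quoted in the paragraph, treating "about $4.9 million" and "nearly $49 million" as exact for illustration; the variable names are made up here.

```python
# Figures quoted from the Sierra Club's 2009 tax return, in US dollars.
lobbying_and_political = 4_900_000  # "about $4.9 million"
federal_lobbying = 480_000          # spent at the federal level
c4_assets = 49_000_000              # "nearly $49 million" in 501(c)(4) assets

# Remainder spent at the state level or on political activities.
non_federal = lobbying_and_political - federal_lobbying

# Shares expressed as percentages.
federal_share = federal_lobbying / lobbying_and_political * 100.0
spend_vs_assets = lobbying_and_political / c4_assets * 100.0

print(f"Non-federal spending: ${non_federal:,}")
print(f"Federal share of lobbying/political spending: {federal_share:.1f}%")
print(f"Lobbying/political spending as share of assets: {spend_vs_assets:.1f}%")
```

The remainder comes to $4.42 million, consistent with the "other $4.4 million" in the text. On these rounded inputs, federal lobbying is just under 10% of the total, which is itself about 10% of the 501(c)(4) arm's assets.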

But that’s because the Sierra Club has made a strategic decision to focus more on litigation than on lobbying. The group files, on average, one lawsuit per week.

Other groups with as much financial might, such as the Environmental Defense Fund and the Natural Resources Defense Council, make similar tactical decisions about litigation, lobbying, and other activities. In fact, litigation involves more bullying than lobbying does. There are few things worse in life than dealing with lawsuits.

It’s time for the people at these well-heeled environmental groups to stop whining about how they “can’t compete” with energy companies.

Paul Chesser is executive director of American Tradition Institute.

By Anthony Watts

Senator Inhofe’s EPW office issued a press release today on the subject of USHCN climate monitoring stations, along with links to this report from the Government Accountability Office (GAO):

...the report notes, “NOAA does not centrally track whether USHCN stations adhere to siting standards...nor does it have an agency-wide policy regarding stations that don’t meet standards.” The report continues, “Many of the USHCN stations have incomplete temperature records; very few have complete records. 24 of the 1,218 stations (about 2 percent) have complete data from the time they were established.” GAO goes on to state that most stations with long temperature records are likely to have undergone multiple changes in measurement conditions.
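The "about 2 percent" figure follows directly from the station counts the GAO gives. A minimal sketch, using only the two numbers quoted above; the variable names are illustrative.

```python
# Station counts quoted from the GAO report.
total_stations = 1218
complete_record_stations = 24

# Stations with at least some missing data since they were established.
incomplete_record_stations = total_stations - complete_record_stations

complete_share = complete_record_stations / total_stations * 100.0

print(f"Stations with complete records: {complete_share:.2f}%")
print(f"Stations with incomplete records: {incomplete_record_stations}")
```

This gives just under 2%, leaving 1,194 of the 1,218 stations with gaps in their records.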

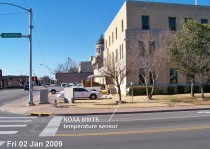

The report shows by their methodology that 42% of the network in 2010 failed to meet siting standards, and they have recommendations to NOAA for solving this problem. This number is of course much lower than what we have found in the surfacestations.org survey, but bear in mind that NOAA has been slowly and systematically following my lead and reports and closing the worst stations or removing them from USHCN duty. For example, the famous Marysville station that started all this (see An old friend put out to pasture: Marysville is no longer a USHCN climate station of record) was closed just a few months after I reported issues with its atrocious siting. Recent discoveries of closures include Ardmore (shown below) and Durant, OK. This may account for a portion of the lower 42% figure for “active stations” the GAO found. Another reason might be that they tended towards using a less exacting rating system than we did.

Recently, while resurveying stations that I previously surveyed in Oklahoma, I discovered that NOAA has been quietly removing the temperature sensors from some of the USHCN stations we cited as the worst (CRN4, 5) offenders of siting quality. For example, here are before and after photographs of the USHCN temperature station in Ardmore, OK, within a few feet of the traffic intersection at City Hall:

Ardmore USHCN station, MMTS temperature sensor, January 2009

Ardmore USHCN station, MMTS temperature sensor removed, March 2011

While NCDC has gone to great lengths to defend the quality of the USHCN network, their actions of closing them speak far louder than written words and peer reviewed publications.

I don’t have time today to go into detail, but will follow up at another time. Here is the GAO summary:

Climate Monitoring: NOAA Can Improve Management of the U.S. Historical Climatology Network

GAO-11-800 August 31, 2011

Highlights Page (PDF) Full Report (PDF, 47 pages) Accessible Text Recommendations (HTML)

Summary

The National Oceanic and Atmospheric Administration (NOAA) maintains a network of weather-monitoring stations known as the U.S. Historical Climatology Network (USHCN), which monitors the nation’s climate and analyzes long-term surface temperature trends. Recent reports have shown that some stations in the USHCN are not sited in accordance with NOAA’s standards, which state that temperature instruments should be located away from extensive paved surfaces or obstructions such as buildings and trees. GAO was asked to examine (1) how NOAA chose stations for the USHCN, (2) the extent to which these stations meet siting standards and other requirements, and (3) the extent to which NOAA tracks USHCN stations’ adherence to siting standards and other requirements and has established a policy for addressing nonadherence to siting standards. GAO reviewed data and documents, interviewed key NOAA officials, surveyed the 116 NOAA weather forecast offices responsible for managing stations in the USHCN, and visited 8 forecast offices.

In choosing USHCN stations from a larger set of existing weather-monitoring stations, NOAA placed a high priority on achieving a relatively uniform geographic distribution of stations across the contiguous 48 states. NOAA balanced geographic distribution with other factors, including a desire for a long history of temperature records, limited periods of missing data, and stability of a station’s location and other measurement conditions, since changes in such conditions can cause temperature shifts unrelated to climate trends. NOAA had to make certain exceptions, such as including many stations that had incomplete temperature records. In general, the extent to which the stations met NOAA’s siting standards played a limited role in the designation process, in part because NOAA officials considered other factors, such as geographic distribution and a long history of records, to be more important. USHCN stations meet NOAA’s siting standards and management requirements to varying degrees. According to GAO’s survey of weather forecast offices, about 42 percent of the active stations in 2010 did not meet one or more of the siting standards.

With regard to management requirements, GAO found that the weather forecast offices had generally but not always met the requirements to conduct annual station inspections and to update station records. NOAA officials told GAO that it is important to annually visit stations and keep records up to date, including siting conditions, so that NOAA and other users of the data know the conditions under which they were recorded. NOAA officials identified a variety of challenges that contribute to some stations not adhering to siting standards and management requirements, including the use of temperature-measuring equipment that is connected by a cable to an indoor readout device, which can require installing equipment closer to buildings than specified in the siting standards. NOAA does not centrally track whether USHCN stations adhere to siting standards and the requirement to update station records, and it does not have an agencywide policy regarding stations that do not meet its siting standards. Performance management guidelines call for using performance information to assess program results. NOAA’s information systems, however, are not designed to centrally track whether stations in the USHCN meet its siting standards or the requirement to update station records. Without centrally available information, NOAA cannot easily measure the performance of the USHCN in meeting siting standards and management requirements.

Furthermore, federal internal control standards call for agencies to document their policies and procedures to help managers achieve desired results. NOAA has not developed an agencywide policy, however, that clarifies for agency staff whether stations that do not adhere to siting standards should remain open because the continuity of the data is important, or should be moved or closed. As a result, weather forecast offices do not have a basis for making consistent decisions to address stations that do not meet the siting standards. GAO recommends that NOAA enhance its information systems to centrally capture information useful in managing the USHCN and develop a policy on how to address stations that do not meet its siting standards. NOAA agreed with GAO’s recommendations.

Recommendations

Our recommendations from this work are listed below with a Contact for more information. Status will change from “In process” to “Open,” “Closed - implemented,” or “Closed - not implemented” based on our follow up work.

Director: Anu K. Mittal

Team: Government Accountability Office: Natural Resources and Environment

Phone: (202) 512-9846

Recommendations for Executive Action

Recommendation: To improve the National Weather Service’s (NWS) ability to manage the USHCN in accordance with performance management guidelines and federal internal control standards, as well as to strengthen congressional and public confidence in the data the network provides, the Acting Secretary of Commerce should direct the Administrator of NOAA to enhance NWS’s information system to centrally capture information that would be useful in managing stations in the USHCN, including (1) more complete data on siting conditions (including when siting conditions change), which would allow the agency to assess the extent to which the stations meet its siting standards, and (2) existing data on when station records were last updated to monitor whether the records are being updated at least once every 5 years as NWS requires.

Agency Affected: Department of Commerce

Status: In process

Comments: When we confirm what actions the agency has taken in response to this recommendation, we will provide updated information.

---------------

Recommendation: To improve the National Weather Service’s (NWS) ability to manage the USHCN in accordance with performance management guidelines and federal internal control standards, as well as to strengthen congressional and public confidence in the data the network provides, the Acting Secretary of Commerce should direct the Administrator of NOAA to develop an NWS agencywide policy, in consultation with the National Climatic Data Center, on the actions weather forecast offices should take to address stations that do not meet siting standards.

Agency Affected: Department of Commerce

Status: In process

Comments: When we confirm what actions the agency has taken in response to this recommendation, we will provide updated information.

--------------------

Vindication for Alan Carlin

Anthony Watts

While the GAO issued a report today saying that the U.S. Historical Climatology Network has real, tangible problems (as I have been saying for years), the Inspector General just released a report this week saying that EPA rushed their CO2 endangerment finding, skipping annoying steps like doing proper review. The lone man holding up his hand at the EPA saying “wait a minute” was Alan Carlin, who was excoriated for doing so. Read more here.

Icecap comment: Kudos to Anthony and the surfacestations.org team for raising this issue and forcing changes and now more transparency, and to Alan Carlin for the courage to speak out from inside an agency on a mission.

By P Gosselin on 26. September 2011

I think I’ve found the root of Joe Romm’s problem. He needs to go back to school and learn more math and natural sciences! At least that’s what a recent Yale University study shows.

Somehow this paper got by me. Maybe this is old news, and so forgive me if this is already known. It’s nothing you’d hear about from the “enlightened” media, after all.

Recall how climate alarmists always try to portray skeptics as ignorant, close-minded flat-earthers who lack sufficient education to understand even the basics of the science, and if it wasn’t for them, the world could start taking the necessary steps to rescue itself.

Unfortunately for the warmists, the opposite is true. The warmists are the ones who are less educated scientifically. This is what a recent Yale University study shows. Hat tip: http://www.politik.ch.

Professor Dan M. Kahan and his team surveyed 1540 US adults and determined that people with more education in natural sciences and mathematics tend to be more skeptical of AGW climate science. Of course this means that people with less education are more apt to be duped by it.

Surprised? Here’s an excerpt of the study’s abstract (emphasis added):

The conventional explanation for controversy over climate change emphasizes impediments to public understanding: Limited popular knowledge of science, the inability of ordinary citizens to assess technical information, and the resulting widespread use of unreliable cognitive heuristics to assess risk. A large survey of U.S. adults (N = 1540) found little support for this account. On the whole, the most scientifically literate and numerate subjects were slightly less likely, not more, to see climate change as a serious threat than the least scientifically literate and numerate ones.

Time for you warmists to go back to school (though I seriously doubt many of you are capable of learning much of anything, on account of extreme cultural cognition disability).

To learn more, here’s a video on Cultural Cognition and the Challenge of Science Communication which looks at risk perception w.r.t. the issues of climate change, and here’s a video on Cultural Cognition Hypothesis.

By Verity Jones, Digging in the Clay

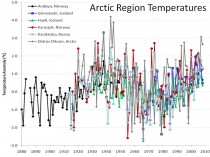

A Tamino rant aimed at Joe D’Aleo’s Arctic ice refreezing after falling short of 2007 record (also at ICECAP) has had me smiling. Tamino’s accusations against Joe of cherry picking are centred on one of the graphs originally posted here at DITC.

“D’Aleo tries so hard to blame Arctic climate change on ocean oscillations. Part of his dissertation includes a plot of ‘Arctic Region Temperatures’”

“Do you suspect that these six stations were “hand-picked” to give the impression he wanted to give? Do you think maybe they were cherry-picked? If so, you’d be right.”

Well excuse me but of course they were cherry-picked, but not for the reasons Tamino suggests. If you really want to spit cherry stones, Tamino, chew on them first.

The graph was originally posted here and here’s what I said about it then:

“Tony had found many climate stations all over the world with a cooling trend in temperatures over at least the last thirty years…

We were concerned that this could be seen as ‘cherrypicking’...In many cases it was not just cherrypicking the stations, but also the start dates of each cooling trend.”

However, the story the post revealed wasn’t the one Tony wanted to tell from the original reason why the stations were chosen - the story that came out of the work was the unexpected (to us) cyclical pattern exhibited by so many of the stations across the world. The pattern matched more closely in regional stations - hence the closely grouped Arctic set in the graph above. So no, the stations in the graph weren’t meant to represent the whole of the Arctic (the original presentation of the graph is here).

But while we’re at it let’s look at a few more stations.

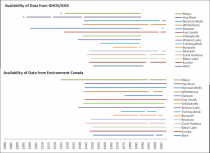

One of the reasons for choosing the stations we did in the graph above was the longevity of the record. This was something I had a look at in the Canadian Arctic too when comparing GHCN/GISS data and that of Environment Canada.

Tamino also berates Joe for not averaging/spatially weighting the data:

“He wants you to think that Arctic regional temperature was just as hot in the 1930s-1940s as it is today.”

If we want a simple comparison of the 1930s with the present we need stations that cover both time periods. In GHCN v2 for Canada, only one station (Fort Smith) has data in 2009 and also has data prior to 1943. Now Fort Smith is more than 1C warmer on average in the five years 1998-2002 than in 1938-1942, but if we look at Mayo in the Environment Canada data set, it is only 0.275C warmer in recent times when comparing averages of the two periods.

These are just two stations but such differences intrigue me. If you don’t compare like with like, how can you be sure there is no inadvertent bias? Are we comparing apples in the 1930s/40s with oranges in the 1990s and 2000s?
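The like-with-like comparison described above (the average of 1998-2002 minus the average of 1938-1942 for a single station) can be sketched in a few lines of Python. This is only an illustration: the function names are my own, and the temperatures are made-up numbers for a hypothetical station, not the Fort Smith or Mayo records.

```python
from statistics import mean

def period_mean(annual_means, start_year, end_year):
    """Average the annual means for start_year..end_year inclusive.

    Raises ValueError if any year in the window is missing, so that
    gaps in the record are not silently averaged over.
    """
    years = range(start_year, end_year + 1)
    missing = [y for y in years if y not in annual_means]
    if missing:
        raise ValueError(f"no data for years: {missing}")
    return mean(annual_means[y] for y in years)

def period_difference(annual_means, early, late):
    """Return mean(late window) minus mean(early window), in deg C."""
    return period_mean(annual_means, *late) - period_mean(annual_means, *early)

# Made-up annual mean temperatures (deg C) for a hypothetical station.
# These are NOT real GHCN or Environment Canada values.
example_station = {
    1938: -3.0, 1939: -2.5, 1940: -2.0, 1941: -2.5, 1942: -3.0,
    1998: -1.0, 1999: -2.0, 2000: -2.5, 2001: -2.0, 2002: -2.5,
}

diff = period_difference(example_station, early=(1938, 1942), late=(1998, 2002))
print(f"late minus early: {diff:+.2f} C")
```

Refusing to average over missing years is a deliberate choice here: a station with a gap in either window simply cannot be used for this kind of comparison, which is exactly why so few stations qualify.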

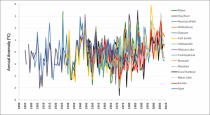

If we plot all the (GHCN/GISS) data (yes it’s another one of those ‘awful’ spaghetti graphs ;-0) - look at that big white gap under the plots from 1937-1946.

Those years look pretty warm compared to 2000-2010, but unfortunately the data for Hay River, Mayo and Dawson do not extend to recent times. To do a comparison, you need to plot GISS and Environment Canada data together, and (as I showed here) there is a bit of a mismatch that needs to be overcome. In Dawson the 1940s are warmer; Hay River shows a slow continuous upward trend.

If you want to compare the two periods in Canada, unfortunately you mostly have to rely on combining stations, and methods for this are well documented (I’ll not go into detail here). What is still debated though is the magnitude of correction (if any) for urban warming.

Much as scientists are required to be objective, there is a need for subjectivity in looking at the surface temperature records. What has changed around this station? Why is one station producing a cyclical signal while another gives a near linear trend? Like I’ve said before, I’m a fan of a parallax view.

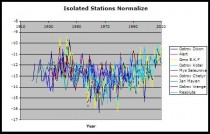

Canadian Arctic stations are mostly rural and very small settlements; they’re labelled as <10,000 population by GISS. Analysis by Roy Spencer showed the greatest warming bias associated with population density increases at low population density. Ed Caryl in A Light in Siberia compared “isolated stations” with “urban” where there was a possible influence from human activity. He found a distinct warming trend in temperatures of “urban” stations where there was increasing evidence of manmade structures or heat sources. In contrast, he found little or no trend in “isolated stations”. Normalising the data for the isolated stations, he too produced a ‘spaghetti’ graph, which, lo and behold, also shows up that cyclical variation. Not only that, but for a lot of these stations (go ahead - call them cherry picked if you wish), the 1940s are clearly warmer than recent times.


Graph from No Tricks Zone.
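For readers wondering what “normalising” station data for a spaghetti graph can involve, one common approach is to subtract each station’s own mean over a shared baseline period, so that stations with very different absolute temperatures can be overlaid. The sketch below assumes that approach; it is not necessarily the method used for the graph above, and the station names and values are invented for illustration.

```python
from statistics import mean

def to_anomalies(series, baseline):
    """Subtract the station's own baseline-period mean from every year."""
    start, end = baseline
    base_values = [t for year, t in series.items() if start <= year <= end]
    if not base_values:
        raise ValueError("station has no data in the baseline period")
    base = mean(base_values)
    return {year: t - base for year, t in series.items()}

# Invented annual means (deg C) for two hypothetical stations that differ
# by 10 C in absolute terms but share the same year-to-year pattern.
stations = {
    "cold_site": {1940: -12.0, 1970: -14.0, 2000: -13.0},
    "mild_site": {1940: -2.0, 1970: -4.0, 2000: -3.0},
}

normalised = {
    name: to_anomalies(series, baseline=(1940, 2000))
    for name, series in stations.items()
}

for name, anomalies in normalised.items():
    print(name, anomalies)
```

After normalising, the two invented stations plot on top of each other, which is what makes a shared cyclical pattern visible regardless of how cold each site is.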

So it is very clear to me that, in comparing station data, we’re dealing with cherries, apples, oranges, and probably a whole fruit bowl. Banana anyone? The problem is that Tamino and others insist on mixing it all up to make a smoothie. Now that’s OK as long as you like bananas. See post here.

Icecap Note: I want to thank Verity for taking time to respond to Tamino. I am preparing my response (preliminary notes here) back to Tamino by examining more stations in the arctic and reiterating my claims and those from the NOAA NSIDC in 2007, JAMSTEC, IARC and Frances at Rutgers that the Pacific and Atlantic played a role in arctic temperatures and ice cover in multidecadal cyclical ways, and evidence from Soon that these cycles may be solar driven. Tamino’s focus on the one graph mentioned, out of the dozen presented in the original post, is a frantic attempt to discredit natural variability, which prompted Verity’s response. Steve Goddard has shown here that there are many anecdotal stories about declining ice and arctic warming during the prior warm period. More to come.